More and more websites are built with JavaScript frameworks.

Although GoogleBot frequently executes JavaScript, SEOs quickly realized that it was safer to send HTML code to robots if they wanted to keep getting good results.

Several technical solutions are possible, but in practice they all come down to executing the JS code on the server side rather than on the client side.

The application tells robots apart from human visitors and sends the robots HTML rather than JS. Yes, this is cloaking, but Google officially allows it in this case. It is simple cloaking based on the user agent.
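To make the idea concrete, here is a minimal sketch of user-agent-based routing. The list of crawler tokens and the names `is_bot` and `choose_variant` are assumptions made for illustration, not part of any particular prerendering product, and a real setup would maintain a more complete list.

```python
import re

# Hypothetical, non-exhaustive list of crawler tokens (assumption for this sketch).
BOT_PATTERN = re.compile(
    r"googlebot|bingbot|duckduckbot|yandex|baiduspider", re.IGNORECASE
)


def is_bot(user_agent: str) -> bool:
    """Return True when the User-Agent header contains a known crawler token."""
    return bool(BOT_PATTERN.search(user_agent or ""))


def choose_variant(user_agent: str) -> str:
    """Pick which version of the page to serve for this request."""
    # Robots get the HTML rendered on the server; browsers get the JS application.
    return "prerendered_html" if is_bot(user_agent) else "client_side_js"
```

In a real application, the returned label would decide whether the request goes to the prerendering service or receives the usual JS bundle.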

However, imagine for a second that your server stops sending HTML to the bots. Your entire visibility could collapse.

What makes this so dangerous is that the problem is impossible to spot visually: your browser keeps running the JS, so the page looks perfectly normal to you.

How to limit the risks?

Wait until you lose your rankings? Too risky.

Check manually every day? Too time-consuming.

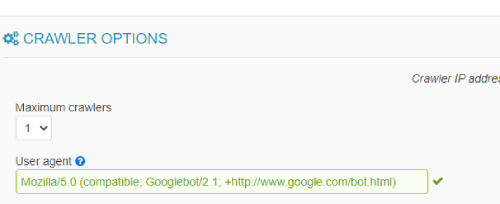

The solution? Configure the Oseox MONITORING bot with the GoogleBot user-agent. It takes 2 seconds.

Our crawler does not execute JavaScript, so if your prerendering fails, you will automatically receive a notification within the hour and be able to react before GoogleBot even notices.
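For readers who want to see what such a check involves, here is a rough sketch of the principle. It is not how the Oseox crawler is implemented. It fetches a page with a GoogleBot user-agent, executes no JavaScript, and checks that a phrase you expect to see is present in the visible HTML text. The names `fetch_as_googlebot`, `visible_text` and `looks_prerendered`, as well as the expected phrase, are assumptions chosen for this example.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen

# User-agent string publicly documented for Googlebot.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


class _TextExtractor(HTMLParser):
    """Collect text nodes, ignoring the content of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    """Return the text a crawler sees without running any JavaScript."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def looks_prerendered(html: str, expected_phrase: str) -> bool:
    """True when the expected phrase is present in the raw HTML text."""
    return expected_phrase.lower() in visible_text(html).lower()


def fetch_as_googlebot(url: str, timeout: float = 10.0) -> str:
    """Download a page while announcing a GoogleBot user-agent."""
    request = Request(url, headers={"User-Agent": GOOGLEBOT_UA})
    with urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

Run on a schedule, a call such as `looks_prerendered(fetch_as_googlebot(url), "a phrase from your page")` returning `False` is the signal that the prerendering has stopped working, even though the page still looks fine in a browser.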